This chapter is also available as an episode of the Syllab Podcast on Spotify.

a) An assembly job

The Cambridge dictionary defines a smartphone as “a mobile phone that can be used as a small computer and that connects to the internet” and Merriam-Webster as “a cell phone that includes additional software functions (such as email or an Internet browser).” In my opinion, both completely miss the point. First, as far as hardware goes, there is a lot more to the device than a phone that can be carried around and connects wirelessly to the internet: sensors and the camera system are an integral part of smartphones nowadays, and a manufacturer would not sell many units without them onboard. Second, the presence of so many different pieces of hardware co-existing on one device, all controlled by a single operating system and made accessible to a vast ecosystem of software applications, gives smartphones functionalities that non-smart mobile phones or even computers do not have. Case in point: snapping pictures and immediately sharing them via a messaging app or on a social network platform. No judgment here on whether this is a positive development. Accordingly, a better definition would be “the joining together of several sensors, input and output devices around core mobile phone capabilities controlled by a single operating system from which new functionalities emerge through the use of applications developed by third parties and the portability aspect of the hardware.”

A little long, but also a much better description of this device, which really is more than the sum of its parts and has had such an impact on our lives, not just on the way we interact with content and data but also with each other. Yet, for the purpose of this chapter, I will not go into the behavioural and social aspects, nor the diverse nature of the applications available out there. Instead, I will stick to the individual components so we can truly appreciate that smartphones have not really invented much: they simply forced the miniaturization of existing electronics, and their success comes down to convenience, the co-existence of those varied electronic components and new use cases. It so happens that most of the individual components were covered in previous chapters of this fourth series so, as I did in the introductory section for the television (S4 Section 8.a), I will provide the relevant internal links and keep comments at a high level, except where more smartphone-specific explanations are called for, microchips being one example.

The very concept of integrated circuits and microprocessors, including their manufacturing process, was the topic of Chapter 1. For smartphones, the notion of integration was pushed even further in the form of “systems on a chip” (SoCs), embedding not just a CPU (S4 Section 2.a), typically using the ARM architecture (S4 Section 3.b), but also cache memory (S4 Section 2.b), sometimes a GPU (S4 Section 1.e), a modem, a neural processing unit (NPU) and other modules allowing for connectivity, whether wireless or via input and output devices. Side note: NPUs are also called AI accelerators because their memory usage is optimized for certain tasks falling into the fashionable bucket of “AI” and they use lower-precision arithmetic in their computations, thus increasing computing throughput (I have included a link to the Wikipedia entry for system on a chip at the end of this chapter if you wish to learn more). SoCs have taken hold in smartphones primarily for efficiency: in power consumption, in design simplicity and in spatial footprint.
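To make the “lower-precision arithmetic” point a little more concrete, here is a minimal Python sketch of symmetric 8-bit integer quantization, one common way of trading precision for throughput. It rests on simplifying assumptions (a single scale factor per vector, no zero point) and the function names are hypothetical; it is not meant to describe how any particular NPU works.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers that share a single scale factor (symmetric scheme)."""
    qmax = 2 ** (num_bits - 1) - 1            # 127 for 8-bit integers
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / qmax                    # real value represented by one integer step
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def quantized_dot(a, b, num_bits=8):
    """Dot product on small integers, rescaled to a real number only once at the end."""
    qa, sa = quantize(a, num_bits)
    qb, sb = quantize(b, num_bits)
    acc = sum(x * y for x, y in zip(qa, qb))  # cheap integer multiply-accumulate
    return acc * sa * sb


weights = [0.42, -1.3, 0.07, 0.9]
inputs = [1.5, 0.2, -0.8, 0.33]
exact = sum(w * x for w, x in zip(weights, inputs))
approx = quantized_dot(weights, inputs)
print(f"full precision: {exact:.4f}, 8-bit approximation: {approx:.4f}")
```

In this example the two results differ only around the third decimal place, while every multiplication works on 8-bit integers rather than floating-point numbers; smaller integer multipliers take less silicon and energy, which is broadly why accelerators favour them.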

One chip, then, and one operating system to manage resources and provide the interface with all the various native applications downloaded over time as well as those accessed through the web (refer to S4 Section 6.c to understand the exact nature of the web). We covered operating systems (and applications) in S4 Section 3.c and the dominant ones, at the time of this writing, are unarguably Android and iOS; they conveniently come pre-installed, and updates are regularly made available to provide additional features, fix existing bugs and patch known security vulnerabilities. As mentioned earlier though, the magic of smartphones lies not only in the hardware integration but also in the stupendous number of applications that can run on them, making use of the sensors at their disposal.

So many applications indeed, to the point where managing our interaction with them becomes a real necessity. In this respect, notifications have become a key feature and, as most users can attest, their aggregate effect can be somewhat overwhelming if not properly managed, not to say irritating. Well, I guess I said it. The underlying communication concept is as follows: the user agrees to receive certain types of notifications, which is equivalent to subscribing to a service, and when updates are available, the server pushes them to the relevant subscribers. Alternatively, the application installed on the client side, i.e. on your smartphone, can interrogate the server at regular intervals about any potential updates; this is called “polling”.
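The difference between the two models can be illustrated with a toy, in-process Python simulation. The Server and PollingClient classes are hypothetical stand-ins for a real notification service and network; the point is only to show who initiates each exchange and how many requests are made.

```python
class Server:
    def __init__(self):
        self.updates = []          # every update published so far
        self.subscribers = []      # callbacks registered by subscribed clients

    def subscribe(self, callback):
        """Push model: the client registers once, then the server calls it back."""
        self.subscribers.append(callback)

    def publish(self, message):
        self.updates.append(message)
        for notify in self.subscribers:
            notify(message)        # pushed to every subscriber immediately

    def get_updates_since(self, index):
        """Polling model: the client asks for anything newer than what it has seen."""
        return self.updates[index:]


class PollingClient:
    def __init__(self, server):
        self.server = server
        self.seen = 0              # number of updates already received
        self.requests = 0          # how many times the client contacted the server

    def poll(self):
        self.requests += 1
        new = self.server.get_updates_since(self.seen)
        self.seen += len(new)
        return new


server = Server()
pushed = []
server.subscribe(pushed.append)    # push client: simply stores whatever arrives

poller = PollingClient(server)
received_by_polling = []
for tick in range(10):             # the polling client checks at every tick
    if tick in (3, 7):
        server.publish(f"update at tick {tick}")
    received_by_polling.extend(poller.poll())

print(pushed)
print(poller.requests)
```

Both clients end up with the same two updates, but the polling client made ten requests to get them; on a phone, each of those requests costs data and battery even when there is nothing new.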

Smartphones have become, for most people, the primary means of interacting with the internet so, for any online content or service provider, ignore this device at your peril. Mostly they do not, and new online services are developed either as native applications or as web applications that display properly on various mobile screen sizes. This is facilitated by frontend technologies (refer to S4 Section 3.e) such as React, with which developers can query the screen size and, following pre-programmed instructions, display content with different layouts accordingly.
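The decision logic behind such responsive layouts can be sketched in a few lines. In practice it usually lives in CSS media queries or front-end JavaScript; this Python version, with hypothetical breakpoint values, only shows the idea of mapping a measured width to a pre-programmed layout.

```python
# Hypothetical breakpoints in CSS pixels; real sites choose their own values.
BREAKPOINTS = [
    (600, "single column"),      # narrow phones held in portrait
    (1024, "two columns"),       # phones in landscape, small tablets
]
DEFAULT_LAYOUT = "three columns"  # anything wider


def choose_layout(viewport_width_px):
    """Return the first layout whose maximum width the viewport stays under."""
    for max_width, layout in BREAKPOINTS:
        if viewport_width_px < max_width:
            return layout
    return DEFAULT_LAYOUT


for width in (390, 844, 1440):
    print(width, "->", choose_layout(width))
```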

Screen format and technology are a key selling point for smartphones, even though an HD resolution of 1280×720 (refer to S4 Section 8.c) or above already results in pixel densities in excess of 200 pixels per inch on a typical phone screen (PPI; an inch is 2.54 cm, so that is about 8 pixels per millimetre). This is nearing the limit of what the human eye can distinguish, around 300 PPI, so it remains a bit of a puzzle why some of these screens offer resolutions well in excess of this threshold. To deliver such pixel densities, the display technology of choice is OLED (refer to S4 Section 8.e), and a lot of the innovation is now shifting away from pure resolution and refresh rates (60Hz going up to 120Hz depending on applications; the higher the number of frames per second, the faster the battery drains, so the highest refresh rates are not always used) and towards form factors. The two main variations are the flip form, with vertically elongated screens folding like a clam shell and a small external screen so the phone can still be used for basic tasks and information checking in its “closed” state, and the foldable version, sometimes complemented by a third, smaller screen on the outside, where the two main screens fold along a vertical rather than horizontal hinge, offering a screen real estate not far off that of smaller tablets.
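The pixel density figures follow from simple geometry: divide the number of pixels along the screen diagonal by the diagonal length in inches. The 6.1-inch diagonal below is an assumed example size, not a figure from the text.

```python
import math

MM_PER_INCH = 25.4


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: pixels along the diagonal divided by the diagonal length."""
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


ppi = pixels_per_inch(1280, 720, 6.1)   # assumed 6.1-inch screen
print(f"{ppi:.0f} ppi, {ppi / MM_PER_INCH:.1f} pixels per mm")
```

With the same formula, a 2400×1080 panel of that size comes out at roughly 430 PPI.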

The features of smartphone screens go beyond their form factor, resolution and refresh rate: they are coupled with sensors that can read fingerprints and, when the technology works well, detect touch and even multi-touch (refer to S4 Section 3.d). Motion sensors, meanwhile, detect changes in orientation, triggering a toggle between portrait and landscape aspect ratios, and even enable other gaming and activity functionalities by sensing acceleration. We have not yet looked into this type of sensor, so we will dedicate section b) to gyroscopes and accelerometers.

With all that said, if there is one sensor that reigns supreme in smartphones, it is the camera system. System is the word because there is generally more than one lens, including a front-facing one, and a lot of the post-processing happens in the SoC, without consumers having to launch specialized applications if they do not wish to. Looking at some of the most advanced specifications (and this will feel dated pretty fast, I expect), the main cameras reach resolutions of up to 50MP, enabled by a sensor with a crop factor of about 3x (for reference, the Four Thirds format has a 2x crop factor and APS-C about 1.6x when compared to a 36×24mm full-frame sensor; refer to S4 Section 7.c), and f-stops as low as f/1.4 (refer to S4 Section 7.a). The main camera may be complemented, on the back side, by an ultrawide lens and a telephoto one offering optical zoom of 4x and more. Genuinely impressive when thinking about the weight and bulk of top-end dedicated camera bodies and lenses. The clincher, though, is the fact that we almost always have the device with or near us. There is a saying in the world of photography that “the best camera is the one you have with you”, and this very much applies to smartphones and their small form factor. A smartphone doesn’t have the best camera sensor and lens by any means, yet many of us who say we care about our pictures use our smartphones to shoot most of them, because the phone is there when we need it.
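Crop factors are ratios of sensor diagonals, with the 36×24mm full frame as the reference, and they also tell you the full-frame equivalent focal length of a lens. The sketch below uses approximate published dimensions for a Four Thirds and a Canon APS-C sensor; the 8mm phone lens paired with a 3x crop is a hypothetical example.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)


def crop_factor(sensor_width_mm, sensor_height_mm):
    """Ratio of the full-frame diagonal to the sensor diagonal."""
    return math.hypot(*FULL_FRAME_MM) / math.hypot(sensor_width_mm, sensor_height_mm)


def full_frame_equivalent_focal_length(focal_length_mm, factor):
    """Focal length giving the same field of view on a full-frame sensor."""
    return focal_length_mm * factor


# Approximate published sensor dimensions
print(f"Four Thirds: {crop_factor(17.3, 13.0):.2f}x")
print(f"APS-C (Canon): {crop_factor(22.3, 14.9):.2f}x")
# Hypothetical phone main camera: 8 mm lens on a 3x crop sensor
print(f"{full_frame_equivalent_focal_length(8.0, 3.0):.0f} mm full-frame equivalent")
```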

The ability to make payments, browse the web, download applications and even make telephone calls (yes, smartphones can also do that) relies on multiple components providing connectivity. The main ones are the radio-frequency transceiver and modem, which receive and transmit radio waves to and from the cellular network; the same for Wi-Fi and Bluetooth (S4 Section 5.c); and NFC, which replicates contactless credit or debit cards to make wireless payments a few centimetres away from point-of-sale terminals and other RFID-based readers (refer to S4 Section 5.e). The current mainstream Wi-Fi standards are Wi-Fi 5 and Wi-Fi 6 while, for cellular telecommunications, the fourth generation (4G) comprises the LTE Advanced and WiMAX 2 standards (S4 Section 5.b), with LTE by far the more widely deployed, both using the internet protocol and predominantly frequency-division multiplexing, especially OFDMA (S4 Section 5.c).

Finally, all of this hardware and computation needs to be powered by electrical current, so batteries and related fast-charging or wireless charging technologies have also taken centre stage, rather than occupying their usual more discreet place in other types of consumer electronics. Nowadays, most flagship models sport capacities somewhere between 3500 and 4500 mAh (milliampere-hours) and can charge at power levels in excess of 30 watts, provided the charger and the cable also support this. If you wish to revise or learn more about electric current, you may want to read S1 Chapter 8 on electrical and magnetic energies as well as S1 Section 6.e, which lists several units of measurement including the watt, ampere and volt. As for battery storage technologies, these will be covered in S6 Chapter 5.
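Capacity in mAh measures electric charge, not energy; multiplying by the battery voltage gives watt-hours, from which a rough best-case charging time follows. The 3.85 V nominal voltage is an assumption (lithium-ion phone cells typically sit around that value), and the estimate ignores charging losses and the slowdown as the battery approaches full charge.

```python
NOMINAL_VOLTAGE_V = 3.85   # assumed typical lithium-ion cell voltage; varies by phone


def battery_energy_wh(capacity_mah, voltage_v=NOMINAL_VOLTAGE_V):
    """Energy stored = charge (Ah) x voltage (V), in watt-hours."""
    return capacity_mah / 1000 * voltage_v


def ideal_charge_time_min(capacity_mah, charging_power_w):
    """Best case from empty: no losses, full power all the way to 100%."""
    return battery_energy_wh(capacity_mah) / charging_power_w * 60


for capacity in (3500, 4500):
    print(f"{capacity} mAh ~ {battery_energy_wh(capacity):.1f} Wh, "
          f"at least {ideal_charge_time_min(capacity, 30):.0f} min at 30 W")
```

Real charging takes noticeably longer than this lower bound, because the charging power is reduced as the battery fills up.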

b) Gyroscope & accelerometer

Gyros, in Ancient Greek, means circle or turn, so a gyroscope is an instrument for detecting rotation. Rotation by itself is a form of motion that doesn’t change the distance between the point being rotated and the axis around which it rotates. Accelerometers, on the other hand, detect linear motion, or more precisely its rate of change of velocity, what we call acceleration (recall that velocity embeds both the concepts of speed and direction, as in vector of motion). Both are therefore often used together in inertial navigation systems to calculate velocity, position and direction of travel purely by dead reckoning, i.e. using only the knowledge of the point of departure and no other geolocation input for the duration of the flight or other mode of travel. Such systems are used in spacecraft, missiles and even submarines, among others.
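Dead reckoning boils down to integrating sensor readings over time, starting from a known point. Below is a minimal two-dimensional Python sketch, assuming the gyroscope supplies a yaw rate and the accelerometer a forward acceleration already corrected for gravity and sensor bias; real inertial navigation systems work in three dimensions and need far more careful filtering. The 100 Hz sampling rate is an assumption.

```python
import math


def dead_reckon(samples, dt, x=0.0, y=0.0, heading=0.0, speed=0.0):
    """Integrate (forward_acceleration m/s^2, yaw_rate rad/s) samples into a 2D position.

    Assumes readings are already corrected for gravity and bias (a big simplification).
    """
    for forward_accel, yaw_rate in samples:
        heading += yaw_rate * dt          # gyroscope: rotation -> direction of travel
        speed += forward_accel * dt       # accelerometer: acceleration -> speed
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
    return x, y, heading, speed


dt = 0.01                                 # assumed 100 Hz sampling
straight = [(2.0, 0.0)] * 500             # 5 s at 2 m/s^2, no rotation
x, y, heading, speed = dead_reckon(straight, dt)
print(f"x={x:.2f} m, y={y:.2f} m, speed={speed:.1f} m/s")
```

For 5 seconds at 2 m/s² the sketch lands very close to the textbook ½at² = 25 m; the small difference comes from the simple step-by-step integration. The same mechanism explains why inertial navigation drifts: any small sensor bias is integrated twice and grows into an ever larger position error.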

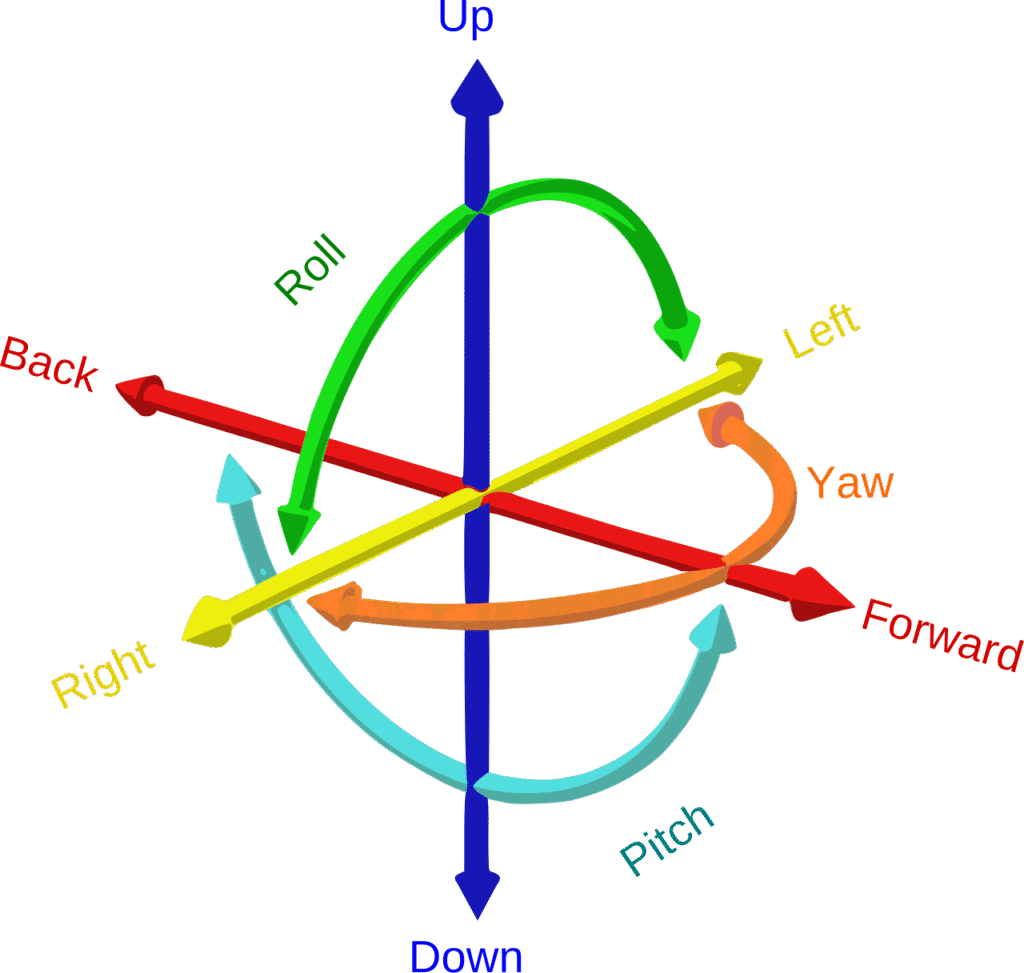

If we consider the 6 possible degrees of freedom offered by three-dimensional space, these can be covered with the gyroscope and accelerometer combination. Figure 5 below is a visual illustration of those six degrees of freedom (6DOF), the list is as follows:

- The linear translations along the three dimensions are forward/backward, left/right, and up/down.

- Rotations can be broken down as a combination of roll (think of standing sideways on a seesaw), yaw (moving your head sideways to say no) and pitch (moving your head along the up-down axis to say yes, at least in most cultures).

Figure 5: Six degrees of freedom

Credit: GregorDS (CC BY-SA 4.0)

To measure rotation along three degrees of freedom, a mechanical gyroscope uses three rings, or gimbals, pivoting on axes that are perpendicular to each other and, at the centre, a spinning flywheel that keeps its orientation, as dictated by the conservation of angular momentum (refer to S1 Section 8.d). This wheel or disc serves as the reference for measuring roll, yaw and pitch. I’ll try to provide a brief description, but an animated image would be best; you can find some on the relevant Wikipedia entry, and I have included the link in the last section for convenience. So, try imagining three concentric rings, like the rings of Saturn: the inner one can roll, the middle one can pitch and the outer one can yaw. The outer ring would be mounted on a stand with an up/down axis of rotation, the middle ring would be mounted within this outer ring on a right/left axis of rotation, and the inner ring would rotate around a forward/backward axis attached within the middle ring. Now imagine gently turning the stand: the rings move around the spinning Saturn-like planet, which keeps its orientation, allowing an observer to measure the angles between them.

For electronics where volume is at a premium, however, this set-up is not workable. Instead, miniature gyroscopes (micro-electromechanical systems, or MEMS) rely on the Coriolis effect (refer to S3 Section 3.c): a tiny mass is kept vibrating at a known velocity and, when the device rotates, that mass experiences a Coriolis force proportional to its mass, its velocity and the angular velocity. Knowing the mass and velocity, what is left to do is to measure this force to work out the rate of rotation.
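The relationship involved is the Coriolis force, F = −2m Ω × v; for a mass moving perpendicular to the rotation axis, its magnitude is F = 2mvΩ, so the angular velocity follows as Ω = F / (2mv). A minimal Python sketch with hypothetical, MEMS-scale numbers:

```python
def angular_velocity_from_coriolis(force_n, mass_kg, velocity_m_s):
    """Invert F = 2 m v Omega (motion perpendicular to the rotation axis)."""
    return force_n / (2 * mass_kg * velocity_m_s)


# Hypothetical values: a 1-microgram proof mass vibrating at 1 cm/s
mass = 1e-9             # kg
velocity = 0.01         # m/s
measured_force = 2e-11  # N, as read by the sensing electrodes
omega = angular_velocity_from_coriolis(measured_force, mass, velocity)
print(f"{omega:.2f} rad/s")
```

The tiny force involved gives a sense of why these sensors need very sensitive capacitive read-outs. In a real device the proof mass oscillates back and forth, so the force oscillates too, and the electronics extract its amplitude.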

Accelerometers, for their part, are the tool we use to measure acceleration. An accelerometer standing still on the Earth’s surface reads 1g, or ≈9.8 m/s², pointing upward along the axis perpendicular to the ground: it senses the support force pushing back against Earth’s gravity (refer to Newton’s third law of motion, explained in S1 Section 6.d). Note that a single accelerometer element measures acceleration along one axis only, so measuring in several dimensions requires several of them, typically three mounted perpendicular to each other. In fact, we already saw an example of acceleration measurement in biology, in the form of the otoliths in our inner ear, which we humans use as part of our vestibular sense (refer to S2 Section 9.d), the sense that helps us balance and orient ourselves.
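Because a phone at rest reads about 1g pointing away from the ground, the direction of that reading reveals how the device is being held, which is what makes an automatic portrait/landscape toggle possible. A minimal Python sketch, assuming a common axis convention (x towards the right edge of the screen, y towards the top, z out of the screen, readings in m/s²) and a hypothetical 0.8 threshold; platforms differ in axes and signs, so the relevant documentation should be checked.

```python
import math


def orientation(ax, ay, az):
    """Classify how a phone at rest is held from one three-axis accelerometer reading.

    At rest the reading points upward, i.e. towards whichever edge of the phone is highest.
    """
    magnitude = math.sqrt(ax ** 2 + ay ** 2 + az ** 2)
    if magnitude == 0:
        return "unknown"                        # free fall: no gravity reading to go by
    if abs(az) > 0.8 * magnitude:
        return "flat"                           # reading mostly through the screen: lying down
    if abs(ay) >= abs(ax):
        return "portrait" if ay > 0 else "portrait (upside down)"
    return "landscape (left edge down)" if ax > 0 else "landscape (right edge down)"


for reading in [(0.0, 9.81, 0.3), (9.6, 0.5, 1.5), (0.2, 0.1, 9.8)]:
    print(reading, "->", orientation(*reading))
```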

The simplest mechanical construct is to attach a known mass to a spring and measure how far the spring stretches or compresses. From there, using the spring constant, it is possible to calculate the force and, dividing by the mass, the acceleration; we will see more about springs in S6 Section 5.d titled “mechanical energy storage”. When built into our smartphones, this type of sensor can detect sudden accelerations, a possible sign of a fall or of the phone being dropped, and it also detects the position in which the handheld device is being held. Other consumer electronics applications include video game controllers and pedometers, which count steps and can be coupled with GPS inputs to measure distance.
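Both steps, from spring displacement to acceleration and from acceleration readings to a possible drop, can be sketched in Python as follows. The sensor values and the free-fall threshold are hypothetical; the drop check relies on the fact that a falling phone’s accelerometer reads close to zero, since nothing is pushing back against gravity.

```python
def acceleration_from_spring(displacement_m, spring_constant_n_per_m, mass_kg):
    """Hooke's law F = k x, then Newton's second law a = F / m."""
    return spring_constant_n_per_m * displacement_m / mass_kg


def looks_like_free_fall(magnitudes_m_s2, threshold=2.0, min_samples=5):
    """Flag a run of near-zero acceleration magnitudes (threshold values are assumptions)."""
    run = 0
    for magnitude in magnitudes_m_s2:
        run = run + 1 if magnitude < threshold else 0
        if run >= min_samples:
            return True
    return False


# Hypothetical sensor: 1 mg proof mass on a 0.5 N/m spring, displaced by 19.6 micrometres
a = acceleration_from_spring(19.6e-6, 0.5, 1e-6)
print(f"{a:.1f} m/s^2")

# Held still, then a short fall (near-zero readings), then the impact
readings = [9.8] * 10 + [0.3] * 8 + [25.0]
print(looks_like_free_fall(readings))
```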

c) The Global Positioning System

Imagine you have two perfectly time-synchronized satellites, S1 and S2, sending you a message at the same time, each containing the satellite’s exact position and the time of transmission (TOT), and you are able to accurately record their respective times of arrival, TOA1 and TOA2, with your own clock or watch.

How would you go about setting the time of your clock and deducing your position? The answer is: you can’t. You need more than 2 satellites. Let’s see how many are required and focus first on establishing our 3-dimensional coordinates.

Knowing TOA1 and TOA2 and the fact that the two signals were sent at the same time, we can multiply the difference TOA1 minus TOA2 (or the opposite) by the speed of light to obtain the difference between our distances to S1 and S2. This places us somewhere on a hyperbolic surface (one sheet of a hyperboloid) in three-dimensional space; if you want to understand this better, I have included a link to the Wikipedia entry for hyperbolic navigation in the last section. For example, if the signals arrive at exactly the same time, this is the special case of a flat plane halfway between S1 and S2. If you were to add TOA3 from a third satellite S3, you would make real progress because you can compute three time-difference pairs: TOA1 minus TOA2, TOA1 minus TOA3 and TOA2 minus TOA3. Only two of them are independent, though (the third is the sum of the other two), so the corresponding surfaces intersect along a curve rather than at a point. A fourth satellite S4 provides a third independent difference, which will allow you, or more probably your GPS device, to pin down your position, generally leaving at most two candidate points, one of which can usually be ruled out, for instance because it lies far from the Earth’s surface.
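The link between a pair of arrival times and a surface can be written as |P − S1| − |P − S2| = c(TOA1 − TOA2), where P is the unknown position and c the speed of light. The Python sketch below measures how far a candidate point is from satisfying that equation; the satellite coordinates are made-up values in metres, and the vacuum speed of light is used, ignoring the corrections listed further on.

```python
import math

C = 299_792_458.0  # speed of light in a vacuum, m/s


def range_difference(toa1_s, toa2_s):
    """Both signals left at the same time, so the arrival gap times c is the distance gap."""
    return C * (toa1_s - toa2_s)


def tdoa_residual(point, sat1, sat2, toa1_s, toa2_s):
    """Zero when `point` lies on the hyperboloid sheet defined by this pair of arrival times."""
    return (math.dist(point, sat1) - math.dist(point, sat2)) - range_difference(toa1_s, toa2_s)


s1 = (-10_000_000.0, 0.0, 20_000_000.0)   # hypothetical satellite positions (m)
s2 = (10_000_000.0, 0.0, 20_000_000.0)

# Equal arrival times: any point of the plane x = 0, halfway between S1 and S2, fits.
print(tdoa_residual((0.0, 3_000_000.0, 0.0), s1, s2, 0.07, 0.07))

# Unequal arrival times: simulate them from a "true" position, then check that it fits.
true_position = (1_000_000.0, 0.0, 0.0)
toa1 = math.dist(true_position, s1) / C
toa2 = math.dist(true_position, s2) / C
print(tdoa_residual(true_position, s1, s2, toa1, toa2))
```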

Once you are geolocated, you know your distance to each satellite and therefore each signal’s time of flight (TOF); adding the TOF to the satellite’s TOT gives the true time of arrival, which can be compared with the TOA recorded by your own clock. Your GPS device can then adjust its internal clock to match that of the satellites, which carry atomic clocks onboard and are themselves regularly readjusted to match reference atomic clocks on our planet.
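Receivers typically fold these two steps together: a common textbook formulation treats the receiver’s clock error as a fourth unknown next to x, y and z, and solves the four resulting “pseudorange” equations iteratively, which is equivalent to working with time differences. The Python sketch below uses Newton’s method under idealized assumptions (vacuum speed of light, no atmospheric or relativistic corrections, perfectly known satellite positions); the receiver location, clock error and satellite coordinates are made-up values in metres.

```python
import math

C = 299_792_458.0  # speed of light in a vacuum, m/s


def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]          # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):                          # back substitution
        x[r] = (m[r][n] - sum(m[r][k] * x[k] for k in range(r + 1, n))) / m[r][r]
    return x


def solve_position_and_clock(sats, toas, tot, iterations=10):
    """Estimate receiver position (m) and clock error (s) from four satellites.

    Each pseudorange c * (TOA - TOT) equals the true distance plus c * clock error.
    """
    pseudoranges = [C * (toa - tot) for toa in toas]
    x, y, z, bias = 0.0, 0.0, 0.0, 0.0                      # start at the Earth's centre
    for _ in range(iterations):
        jacobian, residuals = [], []
        for (sx, sy, sz), rho in zip(sats, pseudoranges):
            r = math.dist((x, y, z), (sx, sy, sz))
            residuals.append(rho - (r + bias))
            jacobian.append([(x - sx) / r, (y - sy) / r, (z - sz) / r, 1.0])
        dx, dy, dz, db = solve_linear(jacobian, residuals)
        x, y, z, bias = x + dx, y + dy, z + dz, bias + db
    return (x, y, z), bias / C


receiver = (0.0, 0.0, 6_371_000.0)      # hypothetical receiver at the North Pole
clock_error = 1e-3                      # its clock runs 1 ms fast (hypothetical)
sats = [(0.0, 0.0, 26_560_000.0),
        (15_000_000.0, 0.0, 21_900_000.0),
        (-7_500_000.0, 13_000_000.0, 21_900_000.0),
        (-7_500_000.0, -13_000_000.0, 21_900_000.0)]
tot = 0.0
toas = [tot + math.dist(receiver, s) / C + clock_error for s in sats]

position, estimated_clock_error = solve_position_and_clock(sats, toas, tot)
print([round(v, 3) for v in position], estimated_clock_error)
```

Note that a 1 ms clock error corresponds to about 300 km at the speed of light, which is why the clock cannot simply be ignored.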

Nonetheless, when doing the TOF computations, a few adjustments need to be made, including but not limited to:

- The index of refraction of the atmosphere and ionosphere, which slows radio signals down to a fraction under c, the speed of light in a vacuum;

- If you recall general relativity (refer to S1 Section 10.a), the mass of the Earth curves spacetime, which bends light slightly but, more importantly, makes the satellites’ clocks, further away from the Earth, tick slightly faster than clocks on the ground, so this needs to be taken into account; and

- The satellites move with respect to each other and to your GPS device. It might only be a few km/s, but this creates a small time-dilation effect (refer to S3 Section 7.f).
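The size of the relativistic effects on the satellite clocks can be estimated to first order from the orbit alone: the satellites’ speed slows their clocks (special relativity), while the weaker gravity at their altitude speeds them up (general relativity). The Python sketch below uses standard values for the Earth’s gravitational parameter and mean radius and an approximate GPS orbital radius; it ignores the Earth’s rotation and orbital eccentricity, so the output should be read as an order of magnitude.

```python
import math

C = 299_792_458.0            # speed of light, m/s
GM_EARTH = 3.986004418e14    # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6.371e6            # mean Earth radius, m (clock on the ground)
R_GPS_ORBIT = 2.656e7        # approximate GPS orbital radius, m
SECONDS_PER_DAY = 86_400


def daily_clock_drift_us():
    """First-order gain (+) or loss (-) of a GPS satellite clock, in microseconds per day."""
    v = math.sqrt(GM_EARTH / R_GPS_ORBIT)                           # circular orbit speed
    special = -(v ** 2) / (2 * C ** 2)                              # moving clock ticks slower
    general = GM_EARTH / C ** 2 * (1 / R_EARTH - 1 / R_GPS_ORBIT)   # higher clock ticks faster
    to_us = SECONDS_PER_DAY * 1e6
    return special * to_us, general * to_us, (special + general) * to_us


sr, gr, net = daily_clock_drift_us()
print(f"special relativity: {sr:.1f} us/day, general relativity: +{gr:.1f} us/day")
print(f"net: +{net:.1f} us/day, i.e. ~{net * 1e-6 * C / 1000:.1f} km of ranging error per day")
```

The net gain of a few tens of microseconds per day may look negligible, but multiplied by the speed of light it amounts to kilometres of positioning error every day if left uncorrected.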

Thus, all somebody needs to tell the exact time and her position is reception from 4 satellites in line of sight…

This explains why the Global Positioning System (GPS), owned by the US Space Force, comprises a medium-sized constellation of about 30 operational satellites at the moment: enough for at least four to be in line of sight from almost anywhere on Earth. For consumer usage, the precision is about 5 metres, and a different frequency band called L5 provides signals enabling accuracy down to 2 cm.

So far, three other global alternatives have been developed, by the USSR/Russia, the European Union and China. The BeiDou Navigation Satellite System (BDS), operated by the China National Space Administration, also has a constellation of about 30 satellites (including 3 geostationary ones above China) and has been fully operational since mid-2020. The Russian Global Navigation Satellite System (GLONASS) is operated by Roscosmos, the corporation in charge of space activities; it has two dozen satellites in orbit, several of which have high orbital inclinations and are regularly used to improve geolocation accuracy at higher latitudes. Finally, Galileo is operated by the European Space Agency and has 30 satellites in orbit, including the back-ups. It started providing full service in 2018 and has a standard accuracy of about 1m.

d) The rise of online map applications

Highly precise positioning and time synchronization will be important features in the upcoming development of autonomous vehicle technology and the roll-out of the related infrastructure. This will be complemented by digital maps of the physical road and roadside networks; in fact, those are already pretty advanced, as any user of Google Maps or other online map applications can attest. So, back to the present.

I have chosen this topic as a very tangible example of new functionalities enabled by the smartphone and internet connectivity, with some profound shifts in user behaviour. Hence, I will not go into the technological aspects of mapmaking and information transfer and will instead list four key aspects of these online apps.

The first is convenience: a mobile phone does not take up much space and, in any case, you already carry it for other purposes, so no extra device or paper map is required. Furthermore, just like an e-reader can store hundreds of books, an online map app provides its users with nearly every street or road map they could wish for, with rare exceptions pertaining to remote corners of the world.

The second is that you don’t need to buy new maps to access an updated version. Actually, you don’t even need to purchase a map in the first place, but don’t worry, they make money off us through advertising, so it is left pocket vs right pocket and I don’t really count the zero price as a major factor. Some map apps are even updated regularly thanks to inputs from the users themselves, which generally makes for quite reliable data, although this can become problematic when we assume the data is always correct.

Number three on the short list is the addition of images, giving users the ability to visualize buildings, landmarks and landscapes rather than being limited to abstract intersecting lines. This helps with checking you are in the right location, arranging convenient meeting points and exploring places you may want to visit. Often, it won’t result in an “I want to go there” type of outcome but rather the opposite, “well, maybe that is not such a good idea”, thereby helping to narrow down options by a process of elimination. This does work quite well.

Fourth and last, it facilitates navigation in two different respects. If you are behind the wheel, it works like a “standard” satellite navigation system. In addition, it can help you compare itineraries and travel times (and costs) across various modes of transport such as car, bus or rail, making it much more convenient to find out which public transport services to take and when the next one is due at the most convenient stop for you, a process which relies on APIs, as we saw in S4 Section 3.e.

e) Trivia – Biometric identification

Our smartphones are equipped with more and more sensors and, on most models, at least one of them is tasked with user authentication, whether to unlock the device itself or to authorize certain transactions, payments and money transfers in particular. These types of sensors are not exclusive to smartphones and can be found wherever some form of identification is required, such as at airport immigration. The overall concept is known as biometric identification and relies on the fact that, as complex biological entities with non-identical genomes, we each have some unique visible patterns or phenotypes. There are several solutions one can come across, so I will content myself with quickly describing the three main ones, namely fingerprint ID, iris recognition and face recognition.

Our fingers are very useful for grasping objects as well as feeling texture, as highlighted in S2 Section 10.a on the sense of touch. To enhance our perception of texture, evolution (blindly) landed on slightly raised skin lines called friction ridges, and their exact pattern is unique: similar, yes, but distinct from one individual to the next. Because glands in our skin produce natural oils that seal in moisture, we leave a negative image of our fingerprints on objects with certain types of surfaces, which can be detected through digital imaging or recovered physically. For the purpose of identification, a user first records his unique fingerprint pattern, which is then checked each time authentication is required in the future.

For iris recognition, the registration and check logic is identical to that of fingerprints, but the advantages of this method include low false-match rates, the absence of physical contact during identification and the relative predictability of the iris shape compared to a face, for instance. Shape is not the same as pattern, but a predictable shape makes it easier to automatically extract the right information once the picture has been acquired. Irises are not just complex and unique; they are uniquely complex. Indeed, an iris has pigmentation, rings, connective tissue and other features which, taken together, combine to create patterns carrying an incredibly high amount of information.
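One well-known way of comparing iris patterns, the approach behind John Daugman’s IrisCode, encodes each iris as a string of bits, together with a mask flagging bits hidden by eyelids, eyelashes or reflections, and measures the fraction of usable bits that disagree (a fractional Hamming distance). The Python sketch below follows that idea with 16-bit codes for readability (real codes are far longer) and an illustrative threshold; it is not necessarily what any given phone or border system does.

```python
def fractional_hamming_distance(code_a, code_b, mask_a, mask_b):
    """Fraction of disagreeing bits among those both masks mark as usable.

    Codes and masks are Python ints used as bit strings; a 1 in a mask means "usable".
    """
    usable = mask_a & mask_b
    n_usable = bin(usable).count("1")
    if n_usable == 0:
        raise ValueError("no usable bits in common")
    disagreements = bin((code_a ^ code_b) & usable).count("1")
    return disagreements / n_usable


MATCH_THRESHOLD = 0.32   # illustrative value; real systems tune it to acceptable error rates


def is_match(enrolled, candidate):
    """enrolled and candidate are (code, mask) pairs; a low distance means the same iris."""
    (code_a, mask_a), (code_b, mask_b) = enrolled, candidate
    return fractional_hamming_distance(code_a, code_b, mask_a, mask_b) < MATCH_THRESHOLD


FULL_MASK = 0xFFFF
enrolled = (0b1011_0010_1110_0101, FULL_MASK)                             # registration
same_eye = (0b1011_0010_1110_0101 ^ 0b0000_0001_0000_0001, FULL_MASK)   # 2 bits differ
other_eye = (0b0110_1001_1011_0100, FULL_MASK)                           # unrelated iris

print(is_match(enrolled, same_eye), is_match(enrolled, other_eye))
```

Unrelated irises tend to disagree on about half of their bits, as chance would predict, which is what makes a clear gap between matches and non-matches possible.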

Compared with iris recognition, machines have a harder time distinguishing human faces from one another. It may be easy for us, despite changes in illumination and expression, thanks to neural circuitry developed over millions of years, but not so for machines, something we previously highlighted in S2 Section 7.d on the process of visual perception. Face recognition doesn’t truly consist of matching faces, be it against one previously recorded version of yours on your personal computer or smartphone or against a database of blacklisted individuals, but of matching feature vectors of your face, such as proportions within and distances between its different elements. Before the matching can be done, the machine detects which part of the input is the face, adjusts for lighting and orientation (the pose), and then detects edges to extract the facial features.
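Matching feature vectors usually comes down to measuring how close two lists of numbers are. The Python sketch below uses cosine similarity, one common choice among several, with made-up four-number feature vectors and a hypothetical threshold; real systems extract much longer vectors and calibrate their thresholds carefully. It also shows the difference between verifying one person (unlocking your phone) and searching a database.

```python
import math


def cosine_similarity(u, v):
    """1.0 for vectors pointing the same way, lower as they diverge."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def verify(enrolled_vector, probe_vector, threshold=0.95):
    """1:1 check, e.g. unlocking your own phone against the single enrolled template."""
    return cosine_similarity(enrolled_vector, probe_vector) >= threshold


def identify(probe_vector, gallery, threshold=0.95):
    """1:N search, e.g. against a database: best match above the threshold, or None."""
    best_name, best_score = None, threshold
    for name, vector in gallery.items():
        score = cosine_similarity(probe_vector, vector)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


enrolled = [0.9, 0.1, 0.4, 0.3]        # hypothetical features recorded at registration
probe_same = [0.85, 0.15, 0.42, 0.28]  # same person, new photo, slightly different values
probe_other = [0.2, 0.9, 0.1, 0.5]     # someone else
gallery = {"alice": enrolled, "bob": probe_other}

print(verify(enrolled, probe_same), verify(enrolled, probe_other))
print(identify(probe_same, gallery))
```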

f) Further reading (S4C9)

Suggested reads:

- Wikipedia on System on a chip: https://en.wikipedia.org/wiki/System_on_a_chip

- Wikipedia on Gyroscope: https://en.wikipedia.org/wiki/Gyroscope

- Wikipedia on Hyperbolic navigation: https://en.wikipedia.org/wiki/Hyperbolic_navigation

Previous Chapter: The Television

Next Chapter: The Watch