>>> Click here to access this episode of the Syllab Podcast on Spotify <<<

a) Purpose and challenges of the visual system

You are reading these lines and can describe the objects present in a room, provided it is sufficiently lit. You can also tell where in the room you and each of those objects are located. And if you go outside, you will see other people, animals or vehicles going past and will be able to avoid them. All this is made possible mainly by visual perception, arguably the linchpin of our ability to understand the world around us and to carry out actions crucial to our everyday survival and long-term success as individual organisms. Were your visual system significantly impaired, you might still be able to carry out many tasks, but only partially or more slowly.

To quote from my book Higher Orders (Chapter 14): “vision is the sense animals most rely on to navigate the world, certainly the result of the wealth of information it provides about the world around us: content, position and motion.” The evolutionary fitness advantage provided by sight is unparalleled among the sensory systems tasked with gathering information from the outside world. In order of importance for survival, however, it probably comes second to the inward-looking systems that garner data about the internal state of an organism, thus allowing it to maintain homeostasis and keep on living minute after minute. We will explore interoception, the catch-all name for the creation and processing of data within our body, in Chapter 10, in conclusion of this second series. The superiority of sight over other senses such as hearing or touch lies in its ability to convey much more information from a wide three-dimensional area, including natural features, objects or organisms fairly distant from the recipient of the information. All this data can then be analysed and integrated, running through the various algorithms of our brain, in order to optimize our next action.

Hearing the sound of movements is not always sufficient to identify their source and tell the difference between something that can be ignored, a prey or a predator; touching a predator isn’t a recipe for survival; smelling provides little clue about motion and the lay of the land; and tasting doesn’t help face external threats or find a mate for reproduction, though olfaction plays a part in the latter. And none of those senses would allow us to decipher the facial expressions and other behavioural cues of other members of the tribe, a crucial skill for being accepted, maintaining our place in the social hierarchy and reproducing. On that basis, we might expect vision to have been the first sense to evolve after interoception, and yet this honour belongs to taste and smell. Why is that? After the first six chapters of this Series 2 as well as the content of Series 1, the answer should be straightforward: vision is extremely complex and would have taken a lot more time to evolve than smell and taste, which will be the topic of Chapter 8.

One may wonder what makes vision so complex, and therefore so challenging to come about and fine-tune to the degree of usefulness it has reached in humans. It is very tempting to answer “just about everything” when we consider that the real world is made of forces and perhaps elementary particles, and nothing else. This implies that natural features, objects or organisms are only defined as molecules or macromolecules bound together by intermolecular forces, and that the atoms they consist of are essentially empty volumes containing, at best, point-like particles we are unable to distinguish for lack of adequate resolution, with additional limitations due to the wavelength of electromagnetic waves, what we call light. Consequently, what we are truly asking from our organism is to garner data from light and interpret it in a manner that allows our central nervous system to make sense of it, even though it doesn’t correspond to reality.

Attaining this objective requires a 4-step process:

- Collecting raw data from the surrounding electromagnetic waves or photons;

- Transducing this data so it can travel as signals along our neural circuitry;

- A perception process enabling us to make sense of this information; and

- A mental representation of this information, being the image we “see”.

This is why vision is such a complex system, requiring thousands upon thousands of mutations, and why it could not possibly have evolved first among our senses.

We’ll tackle the four steps in order in the next sections.

b) Optical harvesting of light

The brain is protected within our skull, literally in the dark, so it is not exposed to any direct external stimulus. All information is channelled for processing to dedicated areas of the central nervous system via nerves, that is, series of neurons, extending all the way to dedicated organs and the very specific receptors located within them. In the case of sight, these organs are our eyes, and their role is to harvest light in a manner that embeds as much information as possible, namely position, wavelength, and intensity or brightness.

The apparatus starts with the cornea, which refracts light, thus changing the angle at which light travels so it can be focused on the retina at the back of our eye. Between the two, behind the cornea, sits the lens, which adds to the refraction of light so that, together with the cornea, the optical power of the human eye is about 60 dioptres. This means that, in air, parallel rays would converge about 1/60 of a metre (~1.7 cm) behind the cornea-and-lens combination; inside the eye, whose fluids have a higher refractive index than air, this distance stretches to a little over 2 cm, roughly where the retina sits. The dioptre is also the unit used by eye care professionals to measure the optical correction of your prescription glasses.
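To make the dioptre arithmetic concrete, here is a minimal Python sketch converting optical power into the distance at which parallel rays converge. The helper name and the refractive index of about 1.336 assumed for the eye’s inner fluids are illustrative assumptions, and treating the eye as a single thin lens is a simplification of its real, multi-surface optics.

```python
# Hypothetical helper; the physics is the thin-lens relation f = n / P (in metres).
AIR_INDEX = 1.0
ASSUMED_EYE_FLUID_INDEX = 1.336  # assumed typical refractive index of the eye's inner fluids


def focal_distance_mm(power_dioptres: float, medium_index: float = AIR_INDEX) -> float:
    """Distance (mm) behind the optics at which parallel rays converge.

    In air this is simply 1 / P metres; inside a medium of refractive
    index n the image-side focal distance becomes n / P metres.
    """
    if power_dioptres <= 0:
        raise ValueError("optical power must be positive for a converging system")
    return 1000.0 * medium_index / power_dioptres


eye_power = 60.0  # approximate combined power of cornea and lens, in dioptres
print(f"In air:         {focal_distance_mm(eye_power):.1f} mm")  # 16.7 mm
print(f"Inside the eye: {focal_distance_mm(eye_power, ASSUMED_EYE_FLUID_INDEX):.1f} mm")  # 22.3 mm
```

The first figure is the familiar 1/60 of a metre; the second shows why the focal point inside the eye lies somewhat further back.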

To control the amount of light reaching the retina, our iris adjusts the size of the pupil, the opening situated between the cornea and the lens. The iris is also where the eye-colour pigments are located. When the pupil dilates, it lets in more light, as happens in low-light environments; this is quite pronounced in animal species such as cats.
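Because the pupil is roughly circular, the light it admits scales with its area, that is, with the square of its diameter. The sketch below assumes commonly quoted extremes of about 2 mm and 8 mm for an adult human pupil; treat the exact figures, and the helper names, as illustrative.

```python
import math


def pupil_area_mm2(diameter_mm: float) -> float:
    """Area of a circular pupil of the given diameter, in square millimetres."""
    return math.pi * (diameter_mm / 2) ** 2


def light_gain(d_small_mm: float, d_large_mm: float) -> float:
    """How many times more light enters through the larger pupil (ratio of areas)."""
    return pupil_area_mm2(d_large_mm) / pupil_area_mm2(d_small_mm)


# Assumed extremes for an adult pupil: ~2 mm in bright light, ~8 mm in the dark.
print(f"{light_gain(2.0, 8.0):.0f}x more light when fully dilated")
```

Quadrupling the diameter lets in sixteen times more light, which is why dilation only partially compensates for very dark environments.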

The retina of mammal species contains three types of photoreceptors: rods, cones and photosensitive ganglion cells, all of which combine proteins with light-sensitive molecules called chromophores. We’ll return to those in the next section dealing with transduction. For our immediate purpose, the degree of perceived brightness is a function of the quantity of light absorbed by those photoreceptors. Since this quantity varies depending on the extent to which the pupil is contracted, what we really perceive is the relative brightness of one item compared to another in our visual field rather than an intrinsic, absolute intensity. In terms of function, the rods provide most of the information relating to brightness and are also the most sensitive in low-light conditions.
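A toy illustration of why relative brightness is the more stable quantity, under the simplifying assumption that absorbed light is just scene luminance multiplied by pupil area: the absolute amount changes with the pupil, while the ratio between two items does not. All names and numbers are hypothetical.

```python
def absorbed_light(luminance: float, pupil_area: float) -> float:
    """Toy model: light absorbed is proportional to scene luminance times pupil area."""
    return luminance * pupil_area


def relative_brightness(a: float, b: float) -> float:
    """Brightness of one item relative to another."""
    return a / b


paper, ink = 80.0, 4.0  # hypothetical luminances of white paper and black ink (arbitrary units)
for pupil_area in (3.0, 12.0, 50.0):  # hypothetical pupil areas in mm^2
    absolute = absorbed_light(paper, pupil_area)
    ratio = relative_brightness(absorbed_light(paper, pupil_area), absorbed_light(ink, pupil_area))
    print(f"pupil {pupil_area:>5.1f} mm^2: paper absorbs {absolute:>6.1f}, paper/ink = {ratio:.1f}")
```

The absolute value swings by more than an order of magnitude, while the paper-to-ink ratio stays at 20 throughout.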

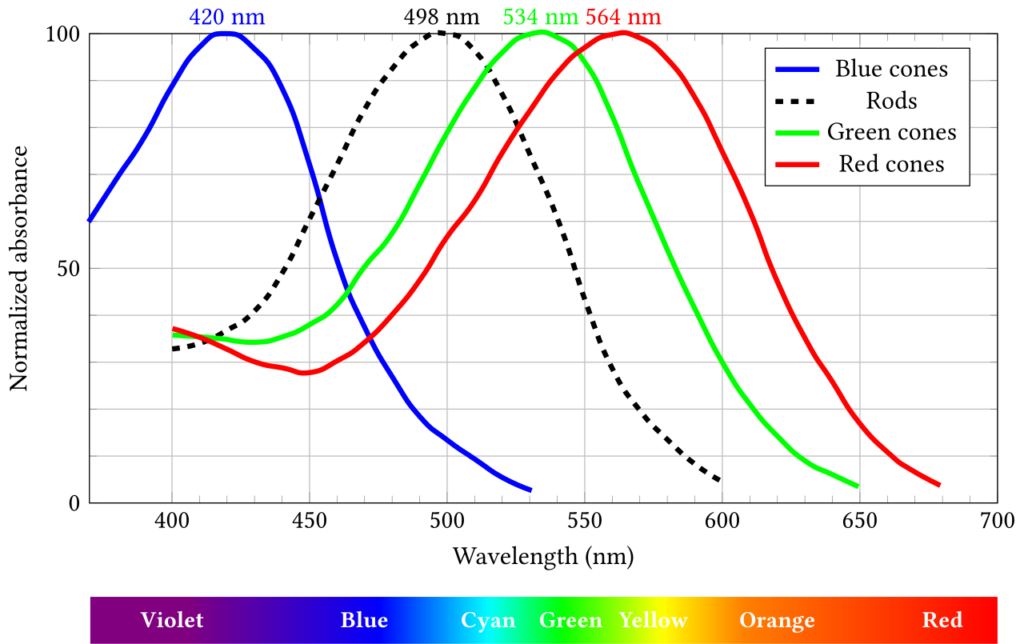

As for wavelength, it is not measured directly either. Nor would that make sense, because in almost all natural instances light is a mixture of EM waves, or photons, of different wavelengths. Instead, our retina is covered with three classes of the aforementioned cones, each class being tuned to a particular, partly overlapping range of wavelengths. Together, these sensitivity ranges determine the visible light spectrum of each species; in the case of humans, it spans roughly 380-750 nanometres. The three classes are designated by the letters S, M and L, with respective peaks at about 420 nm, 534 nm and 564 nm, as shown in Figure 4 below.
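The sketch below approximates each cone class with a bell-shaped (Gaussian) curve centred on the peaks quoted above. The Gaussian shape and the 40 nm width are assumptions for illustration only; measured sensitivity curves are asymmetric and differ in width. The point is simply that each wavelength produces its own pattern of responses across three overlapping classes.

```python
import math

# Peak sensitivities quoted in the text (nm). The Gaussian shape and the shared
# 40 nm width are simplifying assumptions; real cone curves are asymmetric.
CONE_PEAKS_NM = {"S": 420.0, "M": 534.0, "L": 564.0}
ASSUMED_WIDTH_NM = 40.0


def cone_response(cone: str, wavelength_nm: float) -> float:
    """Relative response (0 to 1) of one cone class to monochromatic light."""
    peak = CONE_PEAKS_NM[cone]
    return math.exp(-((wavelength_nm - peak) ** 2) / (2 * ASSUMED_WIDTH_NM ** 2))


def cone_profile(wavelength_nm: float) -> dict[str, float]:
    """Responses of the S, M and L classes to the same light, rounded for display."""
    return {cone: round(cone_response(cone, wavelength_nm), 2) for cone in CONE_PEAKS_NM}


for wavelength in (450, 530, 580, 650):
    print(wavelength, cone_profile(wavelength))
```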

The light detected by the S, M and L cones is then construed by our mind as blue, green and red respectively, and the colour we see is a function of the ratio of light detected by each class. As the diagram shows, the peaks of the M and L cones are close together, so the wavelengths lying between green and red, which we call yellow, strongly activate both classes at once, which makes yellow appear brighter than other colours.
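To illustrate colour as a ratio, this minimal sketch uses the same kind of assumed Gaussian approximation of the S, M and L curves and normalises the three responses to their share of the total. Multiplying the light’s intensity changes every response but leaves the shares, and hence the hue signal, untouched. Function names and the 40 nm width are hypothetical.

```python
import math

CONE_PEAKS_NM = {"S": 420.0, "M": 534.0, "L": 564.0}  # peaks quoted in the text
ASSUMED_WIDTH_NM = 40.0  # assumed Gaussian width; real curves differ


def cone_responses(wavelength_nm: float, intensity: float = 1.0) -> dict[str, float]:
    """Toy S, M, L responses to monochromatic light of a given intensity."""
    return {
        cone: intensity * math.exp(-((wavelength_nm - peak) ** 2) / (2 * ASSUMED_WIDTH_NM ** 2))
        for cone, peak in CONE_PEAKS_NM.items()
    }


def cone_ratios(responses: dict[str, float]) -> dict[str, float]:
    """Share of the total signal carried by each class: the ratio that encodes hue."""
    total = sum(responses.values())
    return {cone: value / total for cone, value in responses.items()}


dim = cone_ratios(cone_responses(580.0, intensity=1.0))
bright = cone_ratios(cone_responses(580.0, intensity=10.0))
print({cone: round(share, 3) for cone, share in dim.items()})
print({cone: round(share, 3) for cone, share in bright.items()})  # same shares: same hue, brighter light
```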

If you followed that logic, this implies colours are a mental construct, and indeed they are. There is no such thing as colour in the real world, only electromagnetic waves of different wavelengths, or photons. This may not correspond to our direct experience, of course, but it should not be contentious when we remember the real world is essentially empty. More on this in section d).

Figure 4: Absorbance of human eye photoreceptors

Credit: Francois frwiki (CC BY-SA 4.0)

So we have covered brightness and wavelength, and we are now left to understand how our visual system extracts position from the light hitting the retinas of our eyes. Recording information in terms of 2D coordinates seems comparatively easy: the lens does flip the image upside down onto our retinas, but the relative positions of light sources remain intact, and nothing a little computation can’t work out. The biggest challenge is actually transforming the two-dimensional data captured into a three-dimensional mental representation.

To clarify this statement, imagine you are on a hike chatting with your friend, with a mountain in the background immediately behind her. Some of the light reflected by her and by the mountain will hit the retinas of your eyes in a continuous stream, without any time gap between the light reflected by the mountain and that reflected by your friend. There is therefore no depth information our photoreceptors can capture and our brain can measure directly; depth has to be inferred through other means. A clue to how this is achieved is the fact that we have two eyes and that they are not located on opposite sides of our head, which would have maximized the extent of our visual field. Instead, they are close to each other but sufficiently apart for our brain to compute stereoscopic depth. In other words, depth is derived by combining the information provided by our left and right eyes, based on the slight difference in the angles between each eye and the source of light. This is why it is so hard to judge depth for distant objects: the difference in angles becomes too small.

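The shrinking difference in angles can be put into numbers with basic trigonometry. The sketch below computes the vergence angle, i.e. the angle between the two lines of sight converging on a point straight ahead, assuming an eye separation of about 63 mm (a typical adult value, used here as an assumption), and compares two objects 1 m apart placed at increasing distances. Function names are hypothetical.

```python
import math

ASSUMED_EYE_SEPARATION_M = 0.063  # typical adult interpupillary distance (assumption)


def vergence_angle_deg(distance_m: float, baseline_m: float = ASSUMED_EYE_SEPARATION_M) -> float:
    """Angle between the two lines of sight converging on a point straight ahead."""
    return math.degrees(2 * math.atan((baseline_m / 2) / distance_m))


def disparity_deg(near_m: float, far_m: float) -> float:
    """Difference in vergence between two objects: the angular cue for their depth gap."""
    return vergence_angle_deg(near_m) - vergence_angle_deg(far_m)


# Two objects 1 m apart, placed further and further away.
for near in (2.0, 20.0, 200.0):
    print(f"{near:>6.0f} m vs {near + 1:>6.0f} m: {disparity_deg(near, near + 1):.5f} degrees")
```

For a fixed gap, the angular difference falls roughly with the square of the distance, which is why stereoscopic depth becomes hard to resolve for distant objects.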
The integration of data provided by the left and right eyes is further confirmed by the cabling of our visual system. Each retina is linked to the brain by one optic nerve, and the two optic nerves meet at the optic chiasm, where the fibres coming from the inner (nasal) half of each retina cross over to the other side. As a result, the signals corresponding to our right visual field, coming from both eyes, are conveyed to our left hemisphere, and conversely the signals relating to our left visual field are conveyed to our right hemisphere.
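The way both eyes end up reporting to the same hemisphere can be expressed as a small routing rule. This is a toy sketch of the standard textbook arrangement at the optic chiasm, where fibres from the inner (nasal) half of each retina cross to the opposite hemisphere while those from the outer (temporal) half do not; the function names are hypothetical.

```python
# Toy routing rule for the crossing at the optic chiasm (an illustration, not anatomy software).
# Inputs are the strings "left" or "right".


def retinal_half(eye: str, field_side: str) -> str:
    """Which half ('nasal' or 'temporal') of an eye's retina receives light from a field side."""
    # The lens inverts the image, so the right visual field lands on the left half of each retina.
    landing_side = "left" if field_side == "right" else "right"
    # The half of a retina on the same side as its eye is the outer (temporal) half.
    return "temporal" if landing_side == eye else "nasal"


def hemisphere(eye: str, field_side: str) -> str:
    """Hemisphere reached by fibres carrying this eye's view of this side of the visual field."""
    if retinal_half(eye, field_side) == "temporal":
        return eye  # uncrossed fibres stay on the same side as the eye
    return "left" if eye == "right" else "right"  # nasal fibres cross at the chiasm


for field in ("right", "left"):
    targets = {eye: hemisphere(eye, field) for eye in ("left", "right")}
    print(f"{field} visual field -> {targets}")
```

Running it shows both eyes sending right-field signals to the left hemisphere, and left-field signals to the right hemisphere.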

c) Transduction of stimuli

As mentioned in the previous chapter on the central nervous system (S2 Section 6.b), the activation of sensory receptors is converted into signals travelling along the afferent neurons by way of electrical membrane potentials and neurotransmitters. This conversion process is called transduction and its specifics depend on the type of receptor. With the exception of the nociceptors we will discover in Chapter 10, the original stimulus is mechanical, as with sound waves or pressure on the skin, or chemical, as with hormones, taste and smell, while vision relies on the absorption of light by the photoreceptors.

The receptor proteins involved, called opsins (or variants of them), have light-sensitive molecules called chromophores attached to them. Chlorophyll is perhaps the best-known chromophore, and it underpins the process of photosynthesis, as described in S1 Section 7.f. The particularity of chromophores is their ability to absorb photons of particular frequencies (frequency being the speed of light divided by the wavelength). The details are very technical and go back to electron orbitals and energy states, concepts discussed in S1 Section 2.c, but suffice to say that this discrimination between energy levels allows photoreceptors to be activated by specific wavelengths. This activation is the direct result of the absorption of photons, which alters the strength of bonds within the molecule and thus results in a spatial rearrangement of its atoms.
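Since frequency is the speed of light divided by the wavelength, and photon energy is Planck’s constant times frequency, the cone peak wavelengths quoted earlier can be converted into frequencies and photon energies. A short sketch using the exact SI values of the constants (the function names are hypothetical):

```python
# Exact SI values of the defining constants.
PLANCK_J_S = 6.62607015e-34
LIGHT_SPEED_M_S = 299_792_458.0
ELECTRON_VOLT_J = 1.602176634e-19


def frequency_thz(wavelength_nm: float) -> float:
    """Frequency = speed of light / wavelength, returned in terahertz."""
    return LIGHT_SPEED_M_S / (wavelength_nm * 1e-9) / 1e12


def photon_energy_ev(wavelength_nm: float) -> float:
    """Photon energy E = h * c / wavelength, returned in electronvolts."""
    return PLANCK_J_S * LIGHT_SPEED_M_S / (wavelength_nm * 1e-9) / ELECTRON_VOLT_J


for cone, peak in (("S", 420), ("M", 534), ("L", 564)):
    print(f"{cone}: {peak} nm -> {frequency_thz(peak):.0f} THz, {photon_energy_ev(peak):.2f} eV")
```

Shorter wavelengths carry more energetic photons: about 2.95 eV at 420 nm versus about 2.20 eV at 564 nm.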

This is only the beginning of a complex visual phototransduction process comprising a cascade of chemical reactions involving different proteins and nucleotides as well as the now familiar potassium and sodium channels with ensuing hyperpolarization or depolarization of membrane potentials. If you have not come across those terms and processes before, I invite you to read S2 Section 3.b on muscle contraction for the ion channels and S2 Section 6.a on the firing of neurons for the membrane potential. I also include a link to the Wikipedia entry for Visual Phototransduction at the end of this chapter.

Eventually, all the information collected by the photoreceptors and retinal ganglion cells travels along the optic nerves linking the two retinas to the brain. This information takes the form of electrical patterns (as mentioned earlier, no light travels through), and those signals are dispatched to various parts of the central nervous system for processing, interpretation, and rendering in the form of what we see.

Do not forget that a nerve is not just one series of neurons: the optic nerve in humans generally contains in excess of 1 million nerve fibres. Consequently, the amount of information conveyed is truly comprehensive, all the more so when it originates from the fovea, a small area in the middle of our retina that, although less than 2 mm wide, is connected to nearly half the fibres of the optic nerve. The fovea is thus responsible for providing high-resolution data from the very centre of our visual field, hence the unconscious darting movements of our eyes when we read, which ensure the fovea is directly facing the object or surface we seek to extract information from.

d) From raw data to visual perception

All this is already pretty impressive, and yet the visual system is far from done: it is somehow able to model the world based on the packets of electrical signals it receives via the optic nerve. “Model” is a key word here because, recall, the reality of the outside world is nothing like what we see in our mind, and the only reason we perform such mental decoding, interpretation and representation is that it provides us with an edge in terms of survival and reproduction.

Based on the science to date, and it does make a lot of sense, the first step in the interpretation of data consists of edge detection. This really comes down to computing differences in brightness between light data coming from adjacent points in space. However, measuring those differences isn’t sufficient: patterns have to be found, such as curves or lines, be they horizontal, vertical or diagonal. This is still computing, but there tend to be several options for our central nervous system to choose from. Since the purpose of vision is to make sense of our environment, it stands to reason that the choice is based on probabilities, reflecting the likelihood of what is out there given what we already know and the context.
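Edge detection as brightness differences between adjacent points can be sketched on a single row of pixels. This one-dimensional version, with a hypothetical scan line and threshold, is a simplification: image-processing systems typically apply 2-D filters such as Sobel kernels, and nothing here claims to describe how neurons implement it.

```python
def brightness_differences(row: list[float]) -> list[float]:
    """Differences in brightness between each pair of adjacent points (a 1-D gradient)."""
    return [right - left for left, right in zip(row, row[1:])]


def edge_positions(row: list[float], threshold: float) -> list[int]:
    """Indices i where the jump between points i and i+1 exceeds the threshold."""
    return [i for i, diff in enumerate(brightness_differences(row)) if abs(diff) > threshold]


# Hypothetical scan line: dark background, a bright object, dark again, with a little noise.
scan_line = [10, 11, 10, 12, 80, 82, 81, 79, 11, 10]
print(brightness_differences(scan_line))
print(edge_positions(scan_line, threshold=20))  # [3, 7]: edges between points 3-4 and 7-8
```

The threshold is what separates genuine edges from small noisy fluctuations; choosing it is itself a judgement call.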

This is where I need to properly introduce the term “concept”, and I will include some quotes from Chapter 2 of Higher Orders on abstracting into concepts and, later on, from Chapter 3 on perception. A concept is not a fixed and immutable set of data: “the term involves a set of associations with other objects, shapes, colours, emotions, actions and observable phenomena.” We could visualize a concept “as a halo in a multi-dimensional space” with a centre of gravity denoting the quintessence of the concept and its halo overlapping with other concepts we could call proximal. Thus the concepts of cats and dogs have many similarities, but their centres of gravity differ because each also has unique features. These concepts are probably encoded into specific neuron networks and activated by their synchronous firing.
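The “halo with a centre of gravity” picture resembles a nearest-centroid (prototype) classifier from machine learning, swapped in here as an analogy rather than a claim about neurons. In this sketch the feature axes and example values are invented for illustration: each concept’s centre is the mean of its examples, and an observation is ranked by its distance to each centre.

```python
import math

# Hypothetical feature axes: (body size, ear pointiness, tendency to bark), each scored 0..1.
# The numbers are invented for illustration; they are not measurements.
EXAMPLES = {
    "cat": [(0.30, 0.90, 0.00), (0.35, 0.80, 0.05), (0.25, 0.95, 0.00)],
    "dog": [(0.60, 0.40, 0.90), (0.40, 0.70, 0.80), (0.80, 0.30, 0.95)],
}


def centroid(points):
    """Centre of gravity of a concept: the mean of its examples along each axis."""
    return tuple(sum(axis) / len(points) for axis in zip(*points))


CENTRES = {concept: centroid(points) for concept, points in EXAMPLES.items()}


def closest_concepts(observation):
    """Concepts ranked by distance from their centre of gravity (closest first)."""
    return sorted(CENTRES, key=lambda concept: math.dist(observation, CENTRES[concept]))


print(closest_concepts((0.35, 0.85, 0.10)))  # small, pointy-eared, quiet
print(closest_concepts((0.45, 0.60, 0.50)))  # ambiguous: lies where the two halos overlap
```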

You may be tempted to ask what the relevance of concepts is when discussing perception. The answer is simply that concepts serve as a guide, the embedding of our existing knowledge of the world, on which our visual system relies to map the information it receives and thus infer what it is most likely seeing. This entails a bi-directional, two-pronged build-up of perception: a bottom-up aspect, starting from edge detection and progressing through lines, textures and more complex shapes to the identification of objects and features, and a concurrent top-down fitting of known concepts onto those intermediary levels of perception, guiding the process towards a coherent output. In the book, I took the example of reading and deciphering the letter “N”, which would initially involve seeing two vertical lines and a diagonal one before these coalesce into the symbol we know, even though its exact shape differs depending on the font being used. In the same way, we see familiar objects or faces in the contours of cloud formations even though there are obviously only ice crystals and water droplets there.

This suggests there are almost always several plausible conceptual candidates, among which our brain has to decide which one is the most likely to be out there. In that respect, context helps dramatically with assessing probabilities. “Circumstances do matter and a cat-like moving entity could be a tiger in a zoo or video game, but most-likely not in your backyard”. With all of this playing out behind the scenes, it is understandable why optical character recognition (OCR) and computer vision are tasks that appear easy on the face of it but are actually challenging to perform with high accuracy.
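The role of context maps neatly onto Bayes’ rule, where a prior (what the context makes likely) multiplies a likelihood (how well the data fits each concept). The sketch below uses hypothetical probabilities for the tiger example; it illustrates the arithmetic only and is not a model of the brain.

```python
def posterior(likelihoods: dict[str, float], priors: dict[str, float]) -> dict[str, float]:
    """Bayes' rule: P(concept | data) is proportional to P(data | concept) * P(concept)."""
    unnormalised = {c: likelihoods[c] * priors[c] for c in likelihoods}
    total = sum(unnormalised.values())
    return {c: value / total for c, value in unnormalised.items()}


# How well a blurry, striped, cat-like moving shape matches each concept (hypothetical values).
likelihoods = {"tiger": 0.8, "house cat": 0.5}

# Context sets the priors (hypothetical values).
contexts = {
    "zoo": {"tiger": 0.5, "house cat": 0.5},
    "backyard": {"tiger": 0.0001, "house cat": 0.9999},
}

for place, priors in contexts.items():
    beliefs = posterior(likelihoods, priors)
    best = max(beliefs, key=beliefs.get)
    print(f"{place}: most likely a {best} ({beliefs[best]:.3f})")
```

The same visual evidence yields “tiger” at the zoo and “house cat” in the backyard, purely because the priors differ.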

When considering the sheer amount of incoming raw data from the optic nerve our brain has to sift through, it becomes evident that processing all of it would be both too expensive computationally, from an energy expenditure standpoint, and possibly too slow, so that we would always be lagging behind events as they unfold, even if only by a few tenths of a second.

Furthermore, since what we see is in any case our best interpretation of the outside world, there is room to significantly optimize the process by relying on a mix of prediction and error minimization. In layman’s terms, our brain partially makes up what it thinks is happening based on the data processed up to that point, which is the feedforward aspect, and it continually trues up and adjusts this mental model based on new data, which we call feedback. This understanding of the visual system is corroborated by various experiments and by the nature of the nerve cabling between different parts of the brain.
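The predict-then-correct loop can be sketched as a simple error-correcting update, where each new estimate moves the prediction a fraction (the gain) of the way towards the observed data. This is a generic delta-rule, or exponential-smoothing, scheme used purely as an analogy; the gain, the function name and the values are assumptions.

```python
def track(observations: list[float], gain: float = 0.5, initial_guess: float = 0.0) -> list[float]:
    """Predict, compare with incoming data, and correct by a fraction of the error.

    The prediction for each step is simply the current estimate (the feedforward part);
    the prediction error then adjusts it (the feedback part).
    """
    estimate = initial_guess
    history = []
    for observed in observations:
        prediction = estimate  # what the model expects to see next
        error = observed - prediction  # mismatch signalled by the new data
        estimate = prediction + gain * error  # true up the model
        history.append(estimate)
    return history


# A scene that stays at 10, then suddenly changes to 20 (hypothetical brightness values).
scene = [10.0] * 5 + [20.0] * 5
print([round(x, 2) for x in track(scene, gain=0.5, initial_guess=10.0)])
```

With a gain of 0.5, the estimate closes half the remaining gap at each step; a smaller gain makes the model slower to register the change.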

In my opinion, this feedforward-feedback combination is the best available explanation for change blindness, even though it is seldom cast in this light. Not only that, but we should fully expect change blindness to occur occasionally. Let me explain quickly. Change blindness is the failure of an individual to notice a change in a visual scene, and it happens to all of us to some degree. It is often explained as some kind of attention deficit, or as visual attention being drawn away from the change; in that sense, this is just another way of saying the feedback mechanism isn’t operating reliably: our mental model remains unaltered even though the actual scene no longer corresponds to it. However, once we notice the change, perhaps because it is pointed out to us, our mental projection is updated and remains so. This is also my favoured hypothesis to explain the difficulty of spotting our own writing and coding mistakes: our brain is running ahead, so to speak, and anticipating what it is reading rather than parsing the actual data. Of course, this hypothesis might be off, yet it makes complete sense, so I am quite confident it captures the central tenet of the physiological explanation.

e) The mystery of qualia

Finally, the last step in the vision process is the mental representation, the generation of the images we have in our mind’s eye. It truly feels as if our eyes open directly onto the outside world and we perceive directly what is out there. Nonetheless, we know full well this isn’t the case: the brain is sheltered inside our skull, and there is no internal screen onto which pictures are projected and watched by some soul-like entity.

Understanding the phenomenology that enables us to experience the world, be it through feelings, hearing sounds, sensing pressure on our skin or seeing landscapes, essentially what defines us as sentient beings, is one of the last frontiers of science in the domain of biology. And it is a very perplexing one, so much so that I devoted two full chapters of Higher Orders to trying to progress our understanding of sentience (Chapter 11) and to advancing a phenomenological hypothesis behind qualia (Chapter 12).

Qualia is the phenomenon underlying our sensations, it is what makes us able to experience the colour blue, the bark of a dog, and the flutter of infatuation in our chest. It is “what allows us to experience the world in a way that doesn’t match the true nature of reality”.

Various high-level hypotheses have been put forward to explain this seemingly quirky phenomenon. I say “quirky” because how indeed can forces and particles result in us and other animals experiencing colours or feelings? But then, emergent properties of physics and chemistry are often obvious in retrospect and yet very difficult to anticipate at our level of intelligence. One theory, called panpsychism, stipulates that basic forms of consciousness reside in every particle or atom. Although this reads like a promising start, it completely lacks detail about how micro-level consciousness would combine in complex organisms to result in our macro-level consciousness, nor does it truly deal with qualia, somehow equating consciousness with it, which it isn’t. In short, it explains nothing and basically ignores the laws of physics. Another, more scientific-sounding theory talks about information integration but likewise doesn’t advance definite testable hypotheses, nor is it at all clear in its explanations.

In Chapter 12 of Higher Orders, I considered in turn every conceivable origin for qualia, including it being caused by a new force, a new property of matter and even a hidden dimension. Each time, the conclusion was unsatisfactory: not necessarily impossible, just highly implausible. When debating the merits of qualia being an emergent property of neural networks, there seem to be aspects that truly fit the bill, in particular in relation to information storage and integration, except that the experiencing itself cannot occur at the network level, as it would imply the existence of a soul-like entity, which was struck off as nonsensical earlier in the book, mostly due to the issue of infinite regress (who or what would be doing the experiencing in this soul-like entity, and so on). Therefore, after exploring every possible process I could think of, my personal hypothesis is that qualia occurs within neurons and is experienced simultaneously across neuron networks, thus bypassing the need for pre-experience integration. The fact that this is not what it subjectively feels like is a moot point, since our senses do not directly convey reality, as we ought to be familiar with by now.

As you can tell, I am fascinated by qualia and would be very keen to unravel the mystery, both because it is interesting and because there would be many medical applications to these findings and related technologies.

f) Trivia – Vision in low-light conditions

Have you ever noticed that in very dim lighting it becomes hard to tell colours apart? The reason isn’t simply that it is dark: darkness alone should only affect brightness, the amount of light refracted onto the retinas, which is partially compensated for by the dilation of the pupils. Unlike qualia, however, photoreceptors are well understood, so we can explain vision in low-light conditions, what is called scotopic vision in the jargon, as opposed to photopic vision in well-lit conditions.

If you recall earlier sections, cones are the receptors that can detect colours, and they are not activated in low-light conditions. This leaves rods to convey the information to our brain. However, rods only report how much light they absorb, not its wavelength, so the information being transduced lacks the data points enabling our central nervous system to derive colours for the purpose of its mental representation (which involves the triggering of qualia).
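Why rods alone cannot deliver colour can be illustrated with the principle of univariance: a photoreceptor outputs a single number, so a dim light at its preferred wavelength and a brighter light at a less preferred one can produce identical signals. The sketch assumes a Gaussian sensitivity curve peaking near 498 nm (the approximate absorption peak of rhodopsin, the rod pigment) with an assumed 50 nm width; the curve shape and names are illustrative.

```python
import math

ROD_PEAK_NM = 498.0  # approximate absorption peak of rhodopsin
ASSUMED_WIDTH_NM = 50.0  # Gaussian width is an assumption for illustration


def rod_signal(wavelength_nm: float, intensity: float) -> float:
    """A rod reports a single number: how much light it absorbed, whatever the wavelength."""
    sensitivity = math.exp(-((wavelength_nm - ROD_PEAK_NM) ** 2) / (2 * ASSUMED_WIDTH_NM ** 2))
    return intensity * sensitivity


green = rod_signal(500.0, intensity=1.0)
# Intensity of a 600 nm (orange) light that produces the same rod signal.
matching_intensity = green / rod_signal(600.0, intensity=1.0)
orange = rod_signal(600.0, intensity=matching_intensity)
print(f"green: {green:.4f}, orange: {orange:.4f}")  # identical: the rod cannot tell them apart
```

With cones, the two lights would produce different S, M and L ratios; with rods alone, only a single brightness value remains.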

Not only are humans essentially colour-blind in low-light conditions, but the resolution of our sight also drops, because the density of nerve fibres linking rods to the brain is lower than that for cones. All this points to the fact that we humans have not evolved to thrive in this type of environment, whereas the species that did have much better scotopic vision, some of them even retaining colour vision in the dark. For instance, owls have a higher density of rods than we do (5 times as much) and very large eyes. However, they have comparatively weak photopic vision on account of a much lower density of cones.

g) Further reading (S2C7)

Suggested reads:

- Higher Orders, by Sylvain Labattu (buy)

- Wikipedia on Chromophore: https://en.wikipedia.org/wiki/Chromophore

- Wikipedia on Visual Phototransduction: https://en.wikipedia.org/wiki/Visual_phototransduction

Disclaimer: the links to books are Amazon Affiliate links so if you click and then purchase one of them, this makes no difference to you but I will earn a small commission from Amazon. Thank you in advance.

Previous Chapter: The Nervous System

Next Chapter: Olfaction & Taste